Metabase is the easy, open source way for everyone in your company to ask questions and learn from data.

Building a SaaS product, a system to handle sensor data from an internet-connected thermostat or car, or an e-commerce store often requires handling a large stream of product usage data, or events. Managing event streams lets you view, in near real-time, how users are interacting with your SaaS app or the products on your e-commerce store; this is interesting because it lets you spot anomalies and get immediate

- The button below will deploy the branch where this README.md lives onto Heroku. Metabase developers use it to deploy branches of Metabase to test our PRs, etc. We DO NOT recommend using this for production. Instead, please use a stable build.

Once the data is in Redshift, we can write ad-hoc queries and build trend analyses and data dashboards using a SQL-compliant analytics tool. This example uses Metabase deployed to Heroku. Metabase is an open-source analytics tool used by many organizations, large and small, for business intelligence. It has many of the same capabilities as Tableau or Looker.

Managing Event Streams with Apache Kafka & Node.js

To get this system up and running, we first need to simulate our product usage events. To do this we’ve created a simple Node.js app, generate_data. generate_data bootstraps a batch ShoppingFeed of simulated events that represent user interactions on our fictitious website, such as adding a product to a wishlist or shopping cart. Since we’re generating millions of events, we’ll use Kafka to manage them.

generate_data sends each product data event to the configured Kafka topic on a single partition using no-kafka, an open source Kafka client for Node.js.
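As a rough illustration of the step above, the sketch below builds one simulated event and wraps it for a single-partition send. The event fields (`userId`, `action`, `product`, `category`, `time`) and the helper names are hypothetical, not taken from generate_data. The `{ topic, partition, message: { value } }` shape is assumed to be what no-kafka's `producer.send()` accepts.

```javascript
// Hypothetical event shape; the field names are illustrative only.
function makeEvent(userId, action, product, category, now = Date.now()) {
  return { userId, action, product, category, time: new Date(now).toISOString() };
}

// Wrap an event in the payload shape assumed for no-kafka's producer.send().
// Using partition 0 for every event keeps the whole stream on a single partition.
function toKafkaPayload(event, topic = 'ecommerce-logs') {
  return { topic, partition: 0, message: { value: JSON.stringify(event) } };
}

const payload = toKafkaPayload(makeEvent(42, 'WISHLIST', 'Red Sled', 'Toys', 0));
console.log(payload.message.value);
```

With a real producer, a payload like this would presumably be passed to `producer.send(payload)` after `producer.init()` resolves.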

To try this yourself, first get the source.

Then create an empty Heroku app and provision a fully managed Kafka cluster on Heroku for it. Provisioning takes just a few seconds for a multi-tenant plan and less than 10 minutes for a dedicated cluster. Once the cluster is provisioned, create a topic called ecommerce-logs, where we will send all of our events, and create a consumer group for later use by downstream consumers. Finally, deploy the sample codebase to your Heroku app.

You’ll also need to ensure your app's environment variables are set via config vars in Heroku: Kafka topic, cluster URL, and client certificates. This is all done for you if you follow the directions above. Note: using Apache Kafka will incur costs on Heroku.
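A small startup check can catch a missing config var before the app tries to connect. The sketch below assumes the Kafka add-on sets `KAFKA_URL`, `KAFKA_TRUSTED_CERT`, `KAFKA_CLIENT_CERT`, and `KAFKA_CLIENT_CERT_KEY`. `KAFKA_TOPIC` and the function name `loadKafkaConfig` are hypothetical names chosen for illustration.

```javascript
// Config var names: the KAFKA_* cert and URL vars are assumed to be set by the
// Heroku Kafka add-on; KAFKA_TOPIC is a hypothetical app-level var.
const REQUIRED = [
  'KAFKA_URL',
  'KAFKA_TRUSTED_CERT',
  'KAFKA_CLIENT_CERT',
  'KAFKA_CLIENT_CERT_KEY',
  'KAFKA_TOPIC',
];

// Read the Kafka settings from an environment object and fail fast if any are missing.
function loadKafkaConfig(env = process.env) {
  const missing = REQUIRED.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing config vars: ${missing.join(', ')}`);
  }
  return {
    // The cluster URL is assumed to be a comma-separated list of broker URLs.
    brokers: env.KAFKA_URL.split(','),
    topic: env.KAFKA_TOPIC,
    ssl: {
      ca: env.KAFKA_TRUSTED_CERT,
      cert: env.KAFKA_CLIENT_CERT,
      key: env.KAFKA_CLIENT_CERT_KEY,
    },
  };
}
```

Calling `loadKafkaConfig()` once at process start makes a misconfigured app fail immediately with a readable message rather than on its first send.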

Real-time Stream Visualization

Given the holidays are fast approaching, let’s visualize how our users are using the ‘wishlist’ feature of our fictitious store in near real-time. Why might we want to do this? Let’s say the marketing team wants to drive engagement, leading to more sales, by promoting the wishlist feature. As the product development team, we’d like to give them the ability to see whether users are responding as soon as the campaign goes out, both for validation and to detect anomalies.

To do this we’ll use the viz app mentioned earlier to consume events from Kafka that represent adds to the wishlist and display the average volume by product category in a D3 stacked stream chart. If a product category were missing from the wishlist, we could easily see it in the chart and dig deeper to find the source of the error.
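The aggregation behind such a chart can be sketched as follows. This is an illustration rather than the viz app's actual code: the `action` and `category` field names, the `'WISHLIST'` action value, and the idea of grouping events into fixed time windows are all assumptions.

```javascript
// Count wishlist adds per product category within one window of events.
function countWishlistAdds(events) {
  const counts = {};
  for (const event of events) {
    if (event.action !== 'WISHLIST') continue; // ignore cart adds and other actions
    counts[event.category] = (counts[event.category] || 0) + 1;
  }
  return counts;
}

// Average the per-window counts across several windows. A category that is
// absent from a window contributes zero for that window.
function averageByCategory(windows) {
  const totals = {};
  for (const events of windows) {
    for (const [category, n] of Object.entries(countWishlistAdds(events))) {
      totals[category] = (totals[category] || 0) + n;
    }
  }
  const averages = {};
  for (const [category, total] of Object.entries(totals)) {
    averages[category] = total / windows.length;
  }
  return averages;
}
```

A category whose average drops toward zero while the others hold steady is the kind of anomaly the chart is meant to surface.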

Under the hood, viz is just a Node.js Express app listening to Kafka and passing the events out on a WebSocket; we use a Simple Kafka Consumer from no-kafka to consume events from the same topic we produced to earlier with generate_data.

We can then broadcast events to the WebSocket server for visualization.
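The broadcast step can be sketched like this. It assumes each connected client exposes a `readyState` property and a `send()` method, as browser-style WebSocket objects do. The function name `broadcast` and the fake clients in the usage lines are hypothetical.

```javascript
const OPEN = 1; // readyState value for an open connection in the WebSocket API

// Serialize the event once and send it to every client that is currently open.
// Returns the number of clients that received the message.
function broadcast(clients, event) {
  const data = JSON.stringify(event);
  let sent = 0;
  for (const client of clients) {
    if (client.readyState === OPEN) {
      client.send(data);
      sent += 1;
    }
  }
  return sent;
}

// Usage with stand-in clients: one open, one already closed.
const received = [];
const fakeClients = new Set([
  { readyState: OPEN, send: (data) => received.push(data) },
  { readyState: 3, send: () => { throw new Error('closed socket'); } },
]);
const delivered = broadcast(fakeClients, { category: 'Toys', count: 2 });
```

Skipping sockets that are not open avoids errors from clients that disconnected between messages.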

Real-time Product Analytics Using Amazon Redshift and Metabase

While the ability to visualize how our users are interacting with our app in real-time using event streams is nice, we need to be able to ask deeper questions of the data, do trend and product analysis, and provide our business stakeholders with dashboards.

With Kafka, we can easily add as many consumers as we like without impacting the scalability of the entire system, while preserving the immutability of events for other consumers to read. When we deployed our example system earlier, we also deployed redshift_batch, a Simple Kafka Consumer that uses consumer groups, which allow us to horizontally scale consumption by adding more dynos (containers).

redshift_batch has a few simple jobs: consume events, send those events to Amazon Redshift for data warehousing, and then commit the offsets of messages it has successfully processed. Connecting and writing to Redshift from Heroku is simply a matter of using pg-promise (an interface for PostgreSQL built on top of node-postgres) with a DATABASE_URL environment variable. You’ll also need to create the table in Redshift to receive all the events.
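The three jobs above can be sketched as below. This is a minimal illustration under stated assumptions, not redshift_batch itself. The column names, the `events` table name, and the injected `writeEvents` and `commitOffsets` functions are hypothetical. With pg-promise, `writeEvents` would presumably be something like `({ text, values }) => db.none(text, values)`.

```javascript
// Hypothetical column list; the real table schema is not shown in this post.
const COLUMNS = ['user_id', 'action', 'category', 'time'];

// Build one multi-row INSERT with positional parameters ($1, $2, ...).
// The table name is interpolated directly, so it must be a trusted constant.
function buildInsert(table, events) {
  if (events.length === 0) throw new Error('buildInsert needs at least one event');
  const values = [];
  const rows = events.map((event) => {
    const placeholders = COLUMNS.map((column) => {
      values.push(event[column]);
      return `$${values.length}`;
    });
    return `(${placeholders.join(', ')})`;
  });
  const text = `INSERT INTO ${table} (${COLUMNS.join(', ')}) VALUES ${rows.join(', ')}`;
  return { text, values };
}

// Write the batch first and commit offsets only after the write succeeds.
// If writeEvents rejects, commitOffsets is never called, so the messages
// can be consumed again later.
async function processBatch(messages, writeEvents, commitOffsets) {
  if (messages.length === 0) return;
  const events = messages.map((message) => JSON.parse(message.value));
  await writeEvents(buildInsert('events', events));
  await commitOffsets(messages);
}
```

Committing after the write means a crash can lead to the same event being written twice, but not to an event being skipped. Whether that trade-off suits a given pipeline is a design decision.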

Note that for all this to work you’ll need an Amazon Redshift cluster and a few other resources; check out this Terraform script for an automated way to create a Redshift cluster along with a Heroku Private Space and a private peering connection between the Heroku Private Space and the Redshift cluster's AWS VPC. Note: this will incur charges on AWS and Heroku, and requires a Heroku Enterprise account.

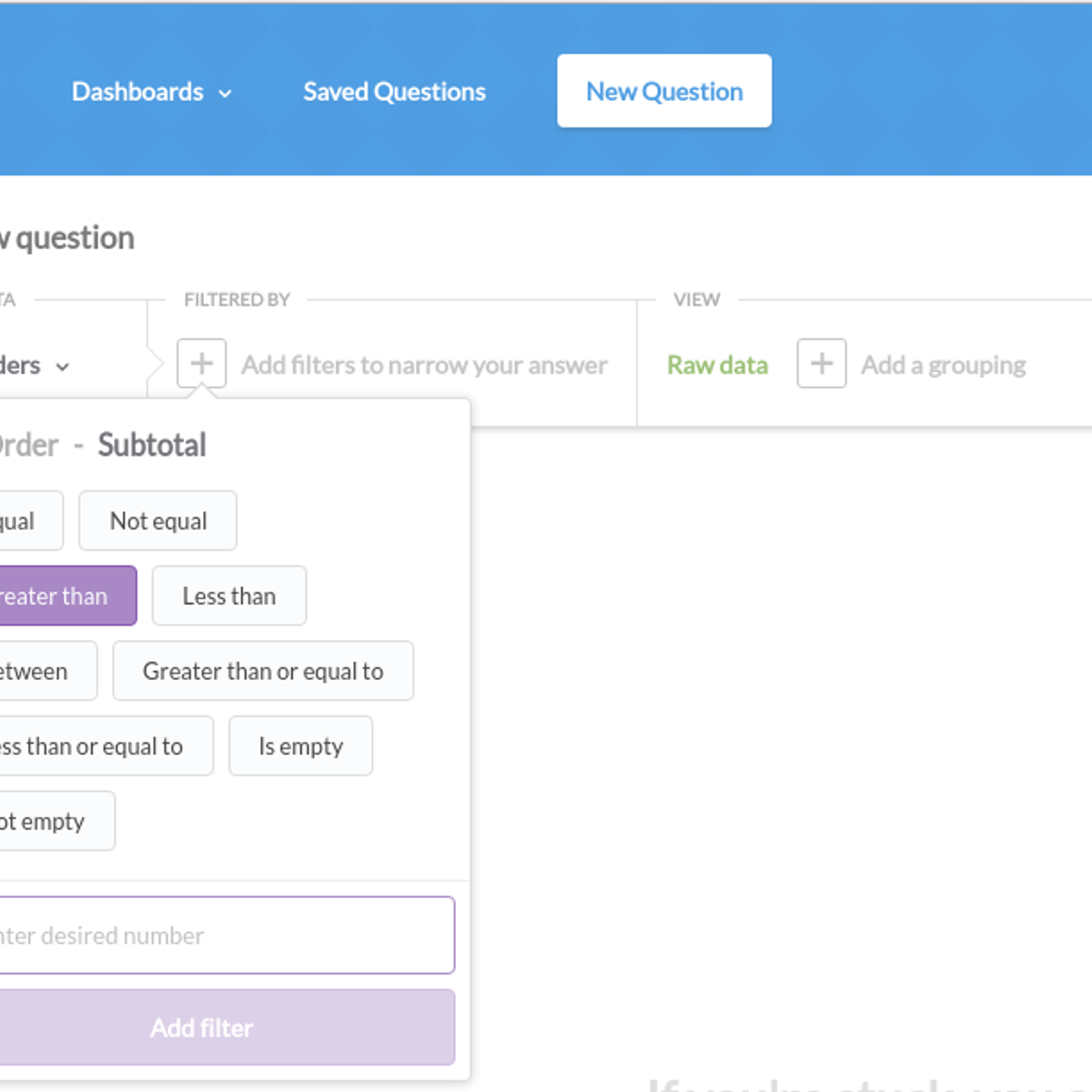

The final piece of our system is the ability to use SQL to query and visualize data in Redshift; for this we can use Metabase, a free open-source product analytics tool that you can deploy to Heroku using Metabase's Heroku Button. Once deployed, you'll need to configure Metabase with the Redshift cluster URL, database name, username, and password.

Now members of your team can kick back, use Metabase’s point and click interface, write ad-hoc SQL queries, and create product analytics dashboards on top of the millions of events your users generate daily, trended over time.

Summary

Event-driven architectures and real-time analytics are an important feature of a modern web app on Heroku. In a previous post, we mentioned that customer-facing applications are now at the core of every business; those same applications are continuously producing data in real-time at an accelerated rate. Similar to the way applications and code are now containerized, orchestrated, and deployed continuously, high-volume event streams must be managed and moved in an organized way for visualization and analysis. We need to extend our web app with a system comprising:

- A tool that can manage large streams of data reliably and to which data producers and data consumers can easily attach themselves

- A data warehouse tool to organize the data and make it queryable quickly

- Data query and visualization tools that are simple to connect to the data warehouse and easy to start using

Specifically in this post, we've covered just one implementation of the lower-right of this diagram: events data 'firehose', Amazon Redshift and Metabase.

And while the implementation of this stream processing system can be as simple or complex as you want, Heroku simplifies much of the DevOps of this system via a fully managed Kafka service that is integrated with consuming and producing Heroku apps that can be horizontally and vertically scaled. Heroku’s easy and secure composability allows the data to be moved into an external infrastructure service like Amazon Redshift for analysis with open source tools that can run on Heroku. The system in this post can be provisioned on Heroku in 15 minutes and was built by a team of 2 developers working sporadically over a two-week period. Once provisioned, it can run with minimal overhead.

I want to extend a huge thank you to those who helped me write or otherwise contributed to this post: Chris Castle, Charlie Gleason, Jennifer Hooper, Scott Truitt, Trevor Scott, Nathan Fritz, and Terry Carter. Your code, reviews, discussions, edits, graphics, and typo catches are greatly appreciated.

Footnotes

1. While I refer to generate_data, viz, and redshift_batch as apps, they are actually three process types running within the same Heroku app. They could instead have been deployed as three separate Heroku apps. The project was architected as one Heroku app to simplify initial deployment and make it easier for you to test out. This is sometimes referred to as a monorepo, i.e. code for multiple projects stored in a single repository.

Running Metabase on Heroku (Metabase v0.39.0.1 Operations Guide)

Heroku is a great place to evaluate Metabase and take it for a quick spin with just a click of a button and a couple of minutes of waiting time. If you decide to keep your Metabase running long term, we recommend some upgrades, as noted below, to avoid the limitations of the Heroku free tier.

Launching Metabase

Before doing anything you should make sure you have a Heroku account that you can access.

If you’ve got a Heroku account, then all there is to do is click the one-click deployment button.

This will launch a Heroku deployment using a GitHub repository that Metabase maintains.

It should only take a few minutes for Metabase to start. You can check on the progress by viewing the logs at https://dashboard.heroku.com/apps/YOUR_APPLICATION_NAME/logs or using the Heroku command line tool with the heroku logs -t -a YOUR_APPLICATION_NAME command.

Upgrading beyond the Free tier

Heroku offers a free tier for very small or non-critical workloads, which is great if you just want to evaluate Metabase and see what it looks like. If you like what you see and decide to use Metabase as an ongoing part of your analytics workflow, we recommend these upgrades, which are quite affordable and will allow you to use all of Metabase’s capabilities without running into annoying limitations.

- Upgrade your dyno to the Hobby tier or one of the professional Standard 1x/2x dynos. The most important reason for this is that your dyno will never sleep, which allows Metabase to run all of its background work, such as sending Pulses and syncing metadata, in a reliable fashion.

- Upgrade your Postgres database to the Basic package or, for more peace of mind, go for the Standard 0 package. The primary reason for this upgrade is to get more than the minimum number of database rows offered in the free tier (10k), which we’ve had some users exhaust within a week. You’ll also get better overall performance along with backups, which we think is worth it.

Known Limitations

- Heroku’s 30 second timeouts on all web requests can cause a few issues if you happen to have longer running database queries. Most people don’t run into this but be aware that it’s possible.

- When using the free tier, if you don’t access the application for a while Heroku will sleep your Metabase environment. This prevents things like Pulses and Metabase background tasks from running when scheduled, and at times makes the app appear to be slow when really it’s just Heroku reloading your app. You can resolve this by upgrading to the hobby tier or higher.

- Sometimes Metabase may run out of memory and you will see messages like Error R14 (Memory quota exceeded) in the Heroku logs. If this happens regularly, we recommend upgrading to the standard-2x tier dyno.

Now that you’ve installed Metabase, it’s time to set it up and connect it to your database.

Troubleshooting

- If your Metabase instance gets stuck part way through the initialization process and only ever shows roughly 30% completion on the loading progress bar:

  - The most likely culprit here is a stale database migrations lock that was not cleared. This can happen if for some reason Heroku kills your Metabase dyno at the wrong time during startup. To fix it, you can either clear the lock using the built-in release-locks command line function or, if needed, log in to your Metabase application database directly and delete the row in the DATABASECHANGELOGLOCK table. Then just restart Metabase.

Deploying New Versions of Metabase

We currently use a Heroku buildpack for deploying Metabase. The metabase-deploy repository is an app that relies on the buildpack and configures various properties for running Metabase on Heroku.

In order to upgrade to the latest version of Metabase on Heroku, you need to trigger a rebuild of your app. Typically this is done by pushing to the Heroku app’s Git repository, but because we create the app using a Heroku Button, you’ll need to link your app to our repo and push an empty commit.

Here’s each step:

- Clone the metabase-deploy repo to your local machine:

- Add a git remote with your Metabase setup:

- If you are upgrading from a version that is lower than 0.25, add the Metabase buildpack to your Heroku app:

- If there have been no new changes to the metabase-deploy repository since the last time you deployed Metabase, you will need to add an empty commit. This triggers Heroku to re-deploy the code, fetching the newest version of Metabase in the process.

- Wait for the deploy to finish

Testing New Versions using Heroku Pipelines

Heroku Pipelines is a feature that allows you to share the same codebase across multiple Heroku apps and maintain a continuous delivery pipeline. You can use this feature to test new versions of Metabase before deploying them to production.

In order to do this, you would create two different Metabase apps on Heroku and then create a pipeline with one app in staging and the other in production.

In order to trigger automatic deploys to your staging app, you will need to fork the metabase-deploy repository into your own GitHub user or organization and then enable GitHub Sync on that repository. To do this, connect the pipeline to your forked repository in the pipeline settings, then enable automatic deploys in the app you added to the staging environment.

Now, when you push to master on your forked metabase-deploy repository, the changes will automatically be deployed to your staging app! Once you’re happy that everything is OK, you can promote the staging app to production using the Heroku UI or CLI.

Similar to the instructions above, to deploy a new version you just need to push an empty commit and the build will pick up the new version.

Database Syncs

You may want to ensure that your staging database is synced with production before you deploy a new version. Luckily with Heroku you can restore a backup from one app to another.

For example, assuming your production app is named awesome-metabase-prod, this command will create a backup:

Note the backup ID referenced in the above command and use that to restore the database to your staging app, awesome-metabase-staging.

This will restore backup ID b101 from your prod app to your staging app.

Once this is done, restart your staging app and begin testing.

Pinning Metabase versions

If you want to pin Metabase to a specific version, you can append the version number to the buildpack URL (as long as that tag exists in the metabase-buildpack repository).

If you haven’t cloned the metabase-deploy repository, this can be done with the Heroku CLI:

If you are using pipelines as shown above, you can modify the app.json file in your forked metabase-deploy repository to use a tagged buildpack URL for the Metabase buildpack. You can then commit and push that change and promote your app when ready.
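For the app.json route, the change amounts to appending `#<version>` to the Metabase buildpack entry. The sketch below shows that edit on a parsed app.json object. The buildpack URL, the entries in the usage example, and the version `0.34.1` are illustrative assumptions; check your fork's app.json for the exact URL it uses and confirm that the tag exists.

```javascript
// Assumed URL of the Metabase buildpack as it appears in app.json.
const METABASE_BUILDPACK = 'https://github.com/metabase/metabase-buildpack';

// Return a copy of the app.json object with the Metabase buildpack pinned to a tag.
// Any existing "#tag" suffix on that entry is replaced; other buildpacks are untouched.
function pinMetabaseBuildpack(appJson, version) {
  const buildpacks = (appJson.buildpacks || []).map((entry) => {
    const baseUrl = entry.url.split('#')[0];
    return baseUrl === METABASE_BUILDPACK
      ? { ...entry, url: `${baseUrl}#${version}` }
      : entry;
  });
  return { ...appJson, buildpacks };
}

// Usage with a stand-in app.json; "0.34.1" is only an example tag.
const pinned = pinMetabaseBuildpack(
  { name: 'Metabase', buildpacks: [{ url: 'heroku/jvm' }, { url: METABASE_BUILDPACK }] },
  '0.34.1'
);
console.log(pinned.buildpacks[1].url);
```

The function leaves the input object unmodified, so the result can be compared against the original before writing it back to disk.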